Rock, Paper, Scissors and Floppy Disks

This article will be published in “Archive 2020. Sustainable archiving of born digital cultural content”, edited by Annet Dekker. It features articles by, among others, Gabriele Blome, Anne Laforet, Caitlin Jones, Aymeric Mansoux, Lizzie Muller, Martine Neddam, Marloes de Valk and Gaby Wijers.

The version published here is the pre-typeset version, licensed with the Free Art License.

Anne Laforet, Aymeric Mansoux, Marloes de Valk

How can artists contribute to an archive in progress? The problems software art archives have to deal with are immense, and are often linked to digital preservation problems faced on a global level: storage media change and age, and formats, operating systems, software and hardware are updated or discontinued. Digital technology is evolving extremely rapidly, and there have been very few strategies to help preserve data for the future generations who will carry on its development[1]. Solving these global problems is well beyond the reach of the individual artist, but there are strategies worth considering that prolong the life of an artwork and aid in the development of long-term solutions. Using Free/Libre/Open Source Software (FLOSS) to create work and publishing it under a copyleft license is one of them[2]. It seems simple: having access to an artwork's source code, and to the source code of the software it depends on, greatly increases the possibilities of maintaining it and keeping it operational. But the choices an artist faces when producing a work, the choices an archivist faces when maintaining it, and the tendencies within the world of software development, corporate and FLOSS alike, all affect the eventual lifespan of a work. Are artworks that are created using FLOSS and published with their source code better suited for preservation than other software art? Or is it best to forget about digital media altogether and stick to rock, paper and scissors?

Hard software

Digital art is stored and developed on extremely short-lived media, and requires a constant migration process[3] to avoid losing data. This can be said of any medium, of course, but in the digital realm a single fragment of corrupted or missing data (bit rot) can render an entire file unreadable. This is a major difference from analogue media, where damage usually degrades a work gradually rather than all at once. The race for new digital storage solutions keeps accelerating. Progress is made almost every week, rendering the previous media obsolete. Though commercially a very attractive strategy, this is lethal for the conservation of data. It makes one wonder, not without irony, whether writing down source code on paper, even in the age of so-called “cloud storage”, is not one of the most secure ways to preserve information.
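To make this fragility concrete: archives commonly guard against bit rot with fixity checks, recording a checksum when a file is ingested and recomputing it after every copy or migration. The minimal sketch below (the function names and the sample source line are hypothetical) flips a single bit and shows that the recorded SHA-256 digest no longer matches. A checksum only detects the damage; recovery still depends on having another intact copy.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


def flip_bit(data: bytes, byte_index: int, bit: int) -> bytes:
    """Return a copy of data with one bit inverted, simulating bit rot."""
    damaged = bytearray(data)
    damaged[byte_index] ^= 1 << bit
    return bytes(damaged)


def verify(data: bytes, recorded_digest: str) -> bool:
    """Fixity check: does the data still match the digest recorded at ingest?"""
    return sha256_hex(data) == recorded_digest


if __name__ == "__main__":
    # Hypothetical stand-in for an artwork's source file.
    original = b"rect(50, 50, 100, 100);"
    digest = sha256_hex(original)  # recorded once, when the file is archived
    damaged = flip_bit(original, byte_index=5, bit=0)
    print(verify(original, digest))  # True
    print(verify(damaged, digest))   # False: one flipped bit is enough to fail
```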

But even writing down source code on paper does not safeguard a work of art. Hardware is only one part of the story; software problems are just as detrimental. Software decay[4], mostly caused by planned obsolescence[5], affects software art in two ways. The external software or framework, not written by the artist, will be superseded sooner or later, and even though backward compatibility of an API[6] or file format is often advertised or aimed for, it always has practical limitations. Furthermore, the history of digital computing and media is still young, and there is not enough knowledge about the long-term viability of current strategies for working around obsolescence. Modern computing is already built on many legacy systems, and it is highly questionable whether such practices can be extended forever[7]. As such, there is no miracle cure for software decay.

There is an option that will at least limit the extent of necessary data migration: the implementation and use of open standards[8]. Using open standards contributes to interoperability between software and is the safest choice when it comes to long-term availability[9]. Interoperability simplifies combining different software, resulting in less need to convert data to other formats[10]. The most obvious example of what open standards make possible is the Internet, which would be a collection of inaccessible and incompatible parts without them. Unfortunately, not all open standards are equally successful. They need to be widely adopted in order to survive, and this is not easily achieved. The greatest obstacle is the reluctance of software companies to adopt them, a result of the short-term commercial benefits of avoiding backward compatibility and interoperability, which force users to keep purchasing and upgrading software. Awareness of the need for open standards is rising, and even though they do not solve the entire problem of data preservation, they do contribute to a long-term solution.
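Footnote [10] describes this kind of interoperability with an SVG file produced by a Python script. The sketch below illustrates that idea under stated assumptions and is not a tool from the article: it writes a minimal SVG document by hand and reads it back with Python's general-purpose XML parser, which stands in for any other program that implements the published standard. The function names and the output file name are hypothetical.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def make_svg(width: int, height: int) -> str:
    """Write a minimal SVG document containing one circle."""
    r = min(width, height) // 4
    return (
        f'<svg xmlns="{SVG_NS}" width="{width}" height="{height}">'
        f'<circle cx="{width // 2}" cy="{height // 2}" r="{r}" fill="black"/>'
        "</svg>"
    )


def read_circles(svg_text: str) -> list:
    """Read the document back with a generic XML parser, as another tool would."""
    root = ET.fromstring(svg_text)
    # Elements live in the SVG namespace, so they are looked up as {namespace}tag.
    return [dict(c.attrib) for c in root.iter(f"{{{SVG_NS}}}circle")]


if __name__ == "__main__":
    svg = make_svg(200, 100)
    with open("circle.svg", "w", encoding="utf-8") as f:
        f.write(svg)
    print(read_circles(svg))
```

Saved with an `.svg` extension, the same text should also open in an SVG-capable editor or web browser; because the format is open, no converter has to sit between the tools.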

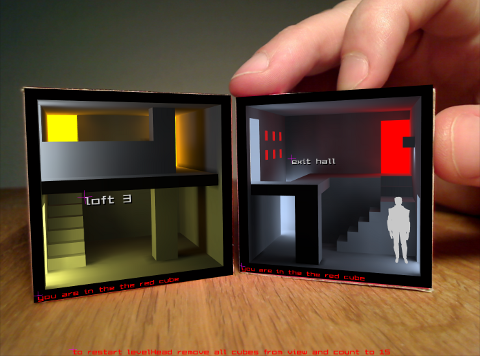

Illustration 1: Levelhead by Julian Oliver.

Choice of framework

During the last decade, the amount of artistic software has grown enormously, presenting artists with an abundance of production environments to choose from. Selecting the right one is essential when it comes to the lifespan of a work. Choosing a FLOSS framework, such as the GNU/Linux operating system combined with an open source programming language, creates a transparent environment, allowing access to all layers of software that enable an artwork to function (operating system, software, libraries, etc.). The combination of this transparency and the freedom to copy and modify all parts of the work, provided the artwork carries a copyleft license, makes upgrading, adjusting and even emulating it much easier than reverse engineering an artwork distributed as a binary on a proprietary platform.

However, not all FLOSS frameworks provide the degree of flexibility and scalability needed for long-term maintenance. Some software is based on platforms that were meant as educational environments rather than production frameworks. Their specifications changed occasionally, or they simply took a long time to mature; Processing[11] is an example. As a consequence, the artist has to update their code frequently to make it work with the latest version of the platform, not to mention other likely headaches when looking at lower-level software dependencies[12]. Other artistic software is produced by a microscopic community or even a single programmer. These projects are relatively small efforts, putting the works created with them in a very fragile position. Unlike more popular software and languages, they are not backed by an industry or a community demanding stability. The software only works under very specific conditions at a very specific time. Migrating such a work is a tremendous task, likely to involve porting a jungle of obscure libraries and frameworks.

The choice of framework does not only affect the lifespan of a work; it also, of course, has a major influence on the creative process of the artist[13]. Choosing a less popular but artistically very interesting programming language is perfectly valid and can be worth the additional effort required to keep the work alive. More awareness of these issues is needed so that the choice of framework can be made in an informed way.

Data sources, capture systems and lawyers

As if it were not already difficult enough to preserve a given piece of software art, some works that are not stand-alone require external sources of data to process. Typical examples are works that use, abuse or mock the content and data generated by Web applications and Internet-based social networks[14]. Depending on the data used, the source is quite likely to be ephemeral. In such a case a capture system is needed that samples this data, so that the artwork can still be shown even when its source of information no longer exists. This seems like a simple solution, and it is without doubt facilitated by open standards, but it is hindered by the prevailing licensing and copyright jungle.
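Such a capture system can be very simple in principle. The sketch below assumes the live source is reachable through some `fetch` function (for example a wrapper around an HTTP request; network failures are assumed to surface as `OSError`, as `urllib` errors do). Each capture is stored as a numbered JSON snapshot together with the time of capture and a note of the license under which the data was obtained, and the artwork falls back to the newest snapshot when the source disappears. All names, the file layout and the license string are hypothetical.

```python
import json
import tempfile
import time
from pathlib import Path
from typing import Callable


def capture(fetch: Callable[[], str], archive: Path, license_note: str) -> Path:
    """Sample the live source once and store it as the next numbered snapshot."""
    archive.mkdir(parents=True, exist_ok=True)
    index = len(list(archive.glob("snapshot-*.json")))
    path = archive / f"snapshot-{index:06d}.json"
    record = {
        "captured_utc": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "license": license_note,  # keep the legal context next to the data
        "data": fetch(),
    }
    path.write_text(json.dumps(record, ensure_ascii=False), encoding="utf-8")
    return path


def load_data(fetch: Callable[[], str], archive: Path) -> str:
    """Prefer live data; fall back to the newest snapshot if the source is gone."""
    try:
        return fetch()
    except OSError:
        snapshots = sorted(archive.glob("snapshot-*.json"))
        if not snapshots:
            raise  # nothing was ever captured: the work has lost its data
        return json.loads(snapshots[-1].read_text(encoding="utf-8"))["data"]


if __name__ == "__main__":
    def live() -> str:
        return "status update #1"  # stands in for a real network request

    def gone() -> str:
        raise ConnectionError("service shut down")

    with tempfile.TemporaryDirectory() as tmp:
        archive = Path(tmp)
        capture(live, archive, license_note="CC BY-SA 3.0 (hypothetical)")
        print(load_data(gone, archive))  # served from the snapshot
```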

Thanks to a general effort to raise awareness about proprietary technology and copyright issues, a growing number of FLOSS projects and open standards are simplifying the way network software communicates, distributes and stores its data, creating greater freedom for its users. Unfortunately, one consequence of this effort is a rather ugly bureaucratic monster that is now very hard to avoid: the licensing of this data, with at least a dozen different licenses to choose from[15].

At the moment, if someone’s network data is not already owned by corporate groups, users are left to choose a license under which their data can be published, and in some cases this choice has already been made for them, with no possibility of changing it. In this increasingly complex legal construct, using corporate or privately owned data as part of a work of art, and capturing this data for archival purposes, can be a rather painful process. This flood of licenses risks creating an unworkable situation in which archiving can only be done by lawyers and becomes almost impossible. When creating an artwork that uses external data, a certain amount of selection and research is needed prior to its production and release, to avoid being limited to artificial, locally generated data when exhibiting the work in the future, unless, of course, the work is meant to be as ephemeral as its data sources.

dpkg --install art.deb: refactoring and porting

At some point, maintaining a work in its original environment becomes impossible because the technology has changed so much that there is no common ground with current software practices. We already see the rapid evolution of computing pushed by “cloud” and mobile technology, and one could well argue that the Internet, as we know it, will eventually evolve into something else or simply disappear.

One current solution to this issue is to start a migration process in order to port and refactor the code so that it runs on another, more recent platform. In this case the maintainer or archivist of a work does not wait for a technology to become obsolete, but instead keeps transforming the work so that it remains fully functional on the latest environments while providing the same “artistic features”. For example, if the work is a piece of software that generates prints of rotating squares every 2 seconds, and if it is clear that the artist considers the output, and not the processes that generate it, to be the art, it does not matter which code is used. The advantage of FLOSS is obvious here: if you can actually read the original code and understand it, you can maintain, update or port it. Of course, the process behind printing a rotating square could easily be emulated or rewritten from scratch[16], but there is a large body of software-based works that would be very difficult to reproduce without access to the source code[17].
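For the rotating-squares example, a from-scratch rewrite could be as small as the sketch below. It assumes, hypothetically, that each “print” is one SVG file showing a square turned a further 15 degrees, written every 2 seconds; the size, step angle and file names are invented for illustration and describe no real work.

```python
import math
import time


def square_corners(cx: float, cy: float, half: float, angle_deg: float) -> list:
    """Corners of a square centred on (cx, cy), rotated by angle_deg."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    corners = []
    for dx, dy in ((-half, -half), (half, -half), (half, half), (-half, half)):
        # Standard 2D rotation of the offset (dx, dy) around the centre.
        x = cx + dx * cos_a - dy * sin_a
        y = cy + dx * sin_a + dy * cos_a
        corners.append((round(x, 2), round(y, 2)))
    return corners


def frame_svg(angle_deg: float, size: int = 200) -> str:
    """One 'print': the rotated square as an SVG polygon."""
    c = size / 2
    points = " ".join(f"{x},{y}" for x, y in square_corners(c, c, size / 4, angle_deg))
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}">'
        f'<polygon points="{points}" fill="none" stroke="black"/></svg>'
    )


def run(frames: int, step_deg: float = 15.0, interval_s: float = 2.0) -> None:
    """Write one frame every interval_s seconds, each turned step_deg further."""
    for i in range(frames):
        with open(f"square-{i:04d}.svg", "w", encoding="utf-8") as f:
            f.write(frame_svg(i * step_deg))
        time.sleep(interval_s)


if __name__ == "__main__":
    run(frames=3)
```

If the output is what counts, any code that produces the same frames is an acceptable replacement; the value of the original source lies in confirming what those frames were supposed to be.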

Looking at how software is maintained and ported in a free software GNU/Linux distribution such as Debian[18], it becomes clear that the idea of “packaging” software artworks does have its advantages. First of all, all changes made to the code are traceable. All the interventions required to obtain working software are available as patches that are applied to the original source code as provided by its author(s). It is not a destructive process; archiving, logging changes and documentation are clearly embedded in the maintenance. Also, the software exists in two forms, a source package and a binary package, which is meant to simplify its distribution and maintenance across several different platforms simultaneously. Finally, the possibility of contributing to the packaging makes it a distributed community effort[19]. Even if it is highly speculative, could we envision a community-driven, distributed maintenance system for free software art and free cultural works[20]? Would it be possible to develop an “art” section in the Debian project, and how closely could software art be linked to the distribution of a free operating system?
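The patch-based workflow can be illustrated with Python's standard `difflib` module. The sketch records a porting change (here a hypothetical Python 2 `print` statement rewritten for Python 3) as a unified diff while leaving the original text untouched, in the spirit of the patches Debian keeps in a source package's `debian/patches` directory. It only produces the patch; applying it is left to tools such as `patch` or `quilt`, and the function name is hypothetical.

```python
import difflib


def make_patch(original: str, modified: str, name: str) -> str:
    """Record a change as a unified diff; the original text is never modified."""
    return "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            modified.splitlines(keepends=True),
            fromfile=f"a/{name}",
            tofile=f"b/{name}",
        )
    )


if __name__ == "__main__":
    upstream = 'print "rotating squares"\n'  # hypothetical artist's original line
    ported = 'print("rotating squares")\n'   # the maintainer's port
    print(make_patch(upstream, ported, "squares.py"), end="")
```

Because the upstream text is kept as it is and every intervention lives in a separate, readable patch, a later maintainer can always see exactly what was changed and why.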

Virtualization… or emulation?

Another approach to archiving software art is virtualization[21]. It is an increasingly popular method[22] that aims to preserve and run the original code written by the artist. This implies that the original system must be left pristine, because of the extremely fragile and very specific chain of software dependencies used by the artist. As a result, maintenance shifts away from the artwork itself towards the system supporting it. This does not simplify maintenance, however; on the contrary, in the long term maintaining these virtual machines just postpones the problem. A virtual machine is itself software that decays and needs either code migration or emulation to work on new hardware and software. It is still unclear which will be more efficient: porting and refactoring the code of individual artworks, or doing the same for virtual machines. Virtual machines are more complex but could, in theory, run different works of art. Artworks are often simpler, but have such specific requirements that most might need a dedicated virtual machine to run. More research is needed in this area in order to invest in the right approach, especially since artworks differ so much from one another that it is hard to share efforts.

The goal of virtualization is to leave the artist’s code, or in some cases its compiled form, untouched, and if the work is based on FLOSS, the virtualization needed to run it is clearly easier to build. But is this essential? If one can provide contextual documentation and a reliable emulation[23], in addition to the original code preserved for future critical code studies, this provides enough valuable data for historical purposes. Besides, works in which the code is more important than its execution or interpretation on a machine are always displayed… simply as text[24].

```perl
use Poetic::Violence;

# Software for the aggressive assault on society.
# Thank GOD It's all right now — we all want equality —
use constant EQUALITY_FOR_ALL
=>
"the money to be in the right place at the right time";
use constant NEVER = 'for;;';
use constant SATISFIED => NEVER;

# It's time to liposuck the fat from the thighs of the bloated
# bloke society—smear it on ourselves and become invisible.
# We are left with no option but to construct code that
# concretizes its opposition to this meagre lifestyle.

package DONT::CARE;
use strict; use warnings;

sub aspire {
my $class = POOR;
my $requested_type = GET_RICHER;
my $aspiration = "$requested_type.pm";
my $class = "POOR::$requested_type";
require $aspiration;
return $class->new(@_);
}
1;

# bought off with $40 dvd players
sub bought_off{
my $self = shift;
$self->{gain} = shift;
for( $me = 0;
$me {gain});
die "poor" if $Exploit
=~ m / 'I feel better about $me' / g;
}
foreach my $self_worth ( @poverty_on_someone_else){
wait 10;
&Environmental_catastrophe (CHINA,$self_worth)
}
}

# TODO: we need to seek algorithmic grit
# for the finely oiled wheels of capital.

# Perl Routines for the redistribution of the world's wealth
# Take the cash from the rich and turn it into clean
# drinking water

# Constants
use constant SKINT => 0;
use constant TOO_MUCH => SKINT + 1;

# This is an anonymous hash record to be filled with
# the Names and Cash of the rich
%{The_Rich} = {
0 => {
Name => '???',
Cash => '???',
},
}

# This is an anonymous hash record to be filled
# with the Price Of Clean Water
# for any number of people without clean water
%{The_Poor} = {
0 =>{
#the place name were to build a well
PlaceName => '???',
PriceOfCleanWater => '???',
Cash => '???',
},
}

# for each of the rich, process them one at a time parsing
# them by reference to RedistributeCash.
foreach my $RichBastardIndex (keys %{The_Rich}){
&ReDisdributeCash(\%{The_Rich->{$RichBastardIndex}});
}

# This is the core subroutine designed to give away
# cash as fast as possible.
sub ReDisdributeCash {
my $RichBastard_REFERENCE = @_;
# go through each on the poor list
# giving away Cash until each group
# can afford clean drinking water
while($RichBastard_REFERENCE ->{CASH} >= TOO_MUCH){
foreach my $Index (keys @{Poor}){
$RichBastard_REFERENCE->{CASH}--;
$Poor->{$Index}->{Cash}++;
if( $Poor->{$Index}->{Cash}
=>
$Poor->{$Index}->{PriceOfCleanWater} ){
&BuildWell($Poor->{$Index}->{PlaceName});
}
}
}
}
```

Illustration 2: Class Library by Graham Harwood.

Documentation

In addition to the choice of framework and data sources, artists can greatly enhance the chances of their work surviving the test of time by documenting it carefully. In the case of digital art involving code, writing clear technical documentation will help future attempts to make it operational again. It goes without saying that this process is facilitated even further by working with a solid, revision-controlled code repository[25], and by licensing that code in such a way that copying, changing and redistributing it will not encounter any bureaucratic obstacles. Furthermore, contextual and artistic documentation[26], through text, video and images, is of immeasurable value. Knowing what results to aim for helps when rescuing non-functional software, and if, in the not-too-distant future, worse comes to worst, those traces might be the only remaining vestiges of a work.

Documenting artworks is a very valuable practice[27] that greatly benefits access to works of software art, as many such artworks are known only through their documentation. This aspect of preservation certainly requires more attention, and the media art distribution circuit is part of the problem. Festivals push artists to produce new works on topical themes, and their selection criteria limit submissions to works produced within the previous year. Many artworks are only shown once or twice, which does not encourage proper preservation and documentation.

Conclusion

Not only artists, but also cultural institutions and funding bodies benefit from the use of FLOSS and copyleft. Investing in a free artwork is a sensible public investment that runs counter to the financially driven art market, because it directly encourages the production of works whose underlying knowledge and technology are made public. Also, even though an artwork’s source code is central to its preservation, it is not current practice for art galleries and artists to include it when a public or private collector acquires an artwork. With a free artwork, it is a given that its distribution is both desirable and possible.

However, promoting free cultural content is not trivial. Free cultural content is described with terminology that often leads to misunderstandings and disagreements, both within the “free culture” communities and beyond them. The recent “Public Domain Manifesto”[28] has been criticized for its choice of intellectual framework by, among others, Richard M. Stallman, the president of the Free Software Foundation[29]. The failure of initiatives such as these to find common ground does not help convince people to critically examine the impact that patents, digital rights management (DRM) and copyright have on culture and its preservation.

Footnotes

[1] Such as the International Research on Permanent Authentic Records in Electronic Systems (InterPARES) project. http://www.interpares.org

[2] Copyleft is a form of licensing that gives others the freedom to run, copy, distribute, study, change and improve a work, and requires all modified versions of a work to grant the same rights. http://www.gnu.org/copyleft/

[3] Data migration is needed when computer systems are upgraded, changed or merged, and involves transferring data from one storage type, system or format to another.

[4] Software decay, also known as software rot, is the gradual loss of functionality or usability of software over time, typically because the environment it depends on changes while the software itself does not.

[5] Each piece of software or technology carries its own planned “death”. Planned obsolescence was first developed in the 1920s and 1930s. Bernard London coined the term in his 1932 pamphlet “Ending the Depression Through Planned Obsolescence”.

[6] An API (Application Programming Interface) is an interface that is used to access an application or a service from a program.

[7] Umberto Eco uses the phrase “lability of present time” to explain that nowadays the quick obsolescence of the objects we use forces us to constantly prepare ourselves for the future. Carrière, Jean-Claude, and Umberto Eco, N’espérez pas vous débarrasser des livres. Paris: Éditions Grasset et Fasquelle, 2009.

[8] An open standard is “a published specification that is immune to vendor capture at all stages in its life-cycle” (definition by the Digital Standards Organization).

[9] Digital Preservation Management Workshop and Tutorial, chapter “Obsolescence: File Formats and Software”, developed at Cornell University. http://www.icpsr.umich.edu/dpm/dpm-eng/oldmedia/obsolescence1.html

[10] For example, a digital artist or graphic designer can generate an image in the open Scalable Vector Graphics (SVG) standard using a Python script, load this SVG file in a higher-level editor such as Inkscape for further manipulation, and the final result, still in SVG, will be visible in a web browser such as Firefox.

[11] Processing, initiated by Ben Fry and Casey Reas, has its roots in “Design By Numbers”, a project by John Maeda, of the Aesthetics and Computation Group at the MIT Media Laboratory. http://processing.org/

[12] Java, in the case of Processing.

[13] De Valk, Marloes, “Tools to fight boredom: FLOSS and GNU/Linux for artists working in the field of generative music and software art”, in The Contemporary Music Review, vol. 28, no. 1, 2009.

[14] For a study on the preservation of net art, see Laforet, Anne, Le net art au musée: stratégies de conservation des œuvres en ligne. Paris: Éditions Questions Théoriques, 2010.

[15] For more information about Free Culture Licenses, please visit http://freedomdefined.org

[16] One such example is Wayne Clements’ love2.pl installation, which simulates David Link’s emulation of the Love Letters programme by Christopher Strachey (1952). The artist reverse-engineered it from the emulated version.

[17] For example, Scott Draves’ Electric Sheep: http://www.electricsheep.org/

[18] http://debian.org

[19] Contributing is not simple, and contributors have to follow the Debian guidelines and policies, but the possibility and the infrastructure exist: public bug trackers that collect the odd contribution, for example, are a reality. Cf. Lazaro, Christophe, La liberté logicielle. Une ethnographie des pratiques d’échange et de coopération au sein de la communauté Debian. Brussels: Academia-Bruylant, 2008.

[20] Julian Oliver was once approached by a group interested in packaging Levelhead, see illustration 1, (http://julianoliver.com/levelhead) for Ubuntu. Also, GOTO10 has a long history of distributing software art as part of their live distribution Puredyne (http://puredyne.goto10.org/), such as the “ap0202”, “cur2” and “self3” software by Martin Howse/xxxxx (http://www.1010.co.uk/).

[21] Virtualization refers to the abstraction of computer resources (http://en.wikipedia.org/wiki/Virtualization) and is an umbrella term. The most common forms are application virtualization, which encapsulates an application from the underlying operating system on which it is executed, and platform virtualization, performed on a given hardware platform by host software (a control program), which creates a simulated computer environment, a virtual machine, for its guest software.

[22] Research projects such as CASPAR (http://www.casparpreserves.eu/) or Aktive Archives (http://www.aktivearchive.ch/) include virtualization as a preservation strategy.

[23] Emulation is the imitation of the behaviour of a particular piece of software or hardware by other software or hardware. Emulation is often seen as the ideal solution for preserving digital artworks (see, for example, Rothenberg, Jeff, Avoiding Technological Quicksand: Finding A Technical Foundation For Digital Preservation. Council on Library and Information Resources. Washington. http://www.clir.org/pubs/reports/rothenberg/contents.html). For an example of the emulation of a digital artwork, see Dimitrovsky, Isaac, Final report, Erl-King project. Variable Media Network. 2004. http://www.variablemedia.net/e/seeingdouble/report.html

[24] For example, Graham Harwood’s “Class Library”, see illustration 2 (http://www.scotoma.org/notes/index.cgi?ClassLib) and Pall Thayer’s “Microcodes” (http://pallit.lhi.is/microcodes/)

[25] Version control enables the management of changes to software and documents. See also Yuill, Simon, “Concurrent Versions Systems”, in Fuller, Matthew (ed.), Software Studies. A Lexicon. Cambridge, MA: The MIT Press, 2008.

[26] Depocas, Alain, Digital Preservation: Recording the Recoding. The documentary strategy. 2001. http://www.fondation-langlois.org/html/e/page.php?NumPage=152

[27] For a discussion about the role of documentation by artists, especially with regard to conceptual art, see Poinsot, Jean-Marc, Quand l’œuvre a lieu. L’art exposé et ses récits autorisés (nouvelle édition revue et augmentée). Dijon: Presses du réel, 2008.

[28] The manifesto was produced within the context of COMMUNIA, the European Thematic Network on the Digital Public Domain, in 2009.

[29] Stallman explains on his blog why he will not sign the manifesto: http://www.fsf.org/blogs/rms/public-domain-manifesto