Within a computer network such as the Internet, communication between individuals is heavily mediated by software. Whether this software runs locally or as a remote service, this mediation works by constantly generating new data or manipulating existing data. Our ability to properly respond to this discrete flow of digital information is therefore modulated by two factors: the amount of data to consume, and the time allocated for this consumption and interpretation.

The amount of data that is available today is gigantic. This volume and its quasi-physical properties are at the centre of commercial opportunities in the form of data markets, big data analysis, data brokerage and data mining, but they are also a fundamental component of citizen-driven initiatives such as the open data movement.

At the same time, the quest for real-time, continuous consumption of digital data is forcing us to operate within an increasingly short analysis window. For instance, following your “friends” and acquaintances on numerous social networks is getting more and more complicated due to the number of different platforms, interfaces, protocols and contexts in which such information can be accessed. In between a hundred status updates, several times as many tweets or dents, a thousand posts pulled from syndicated feeds, or whatever data comes from the latest social software fad that guarantees the social emancipation of its oppressed consumers with relevant tracking and targeted advertisements, it becomes more and more difficult to take some time, and therefore some distance, from our digital diet.

Funnily enough, this very difficulty in turn becomes a new business opportunity in the field of digitally assisted procrastination. The latter is best exemplified by the blossoming trend of exonerating software utopias that allow us to postpone today’s impossible reading of articles that are doomed to be obsolete tomorrow. At the other end of the spectrum, pre-emptive action can take the form of a complete delegation of the editorial process to an algorithm that filters the relevant “stuff” for its users based on the constant surveillance of their habits, hence locking them up in an eternal paradise of relevant copypasta.

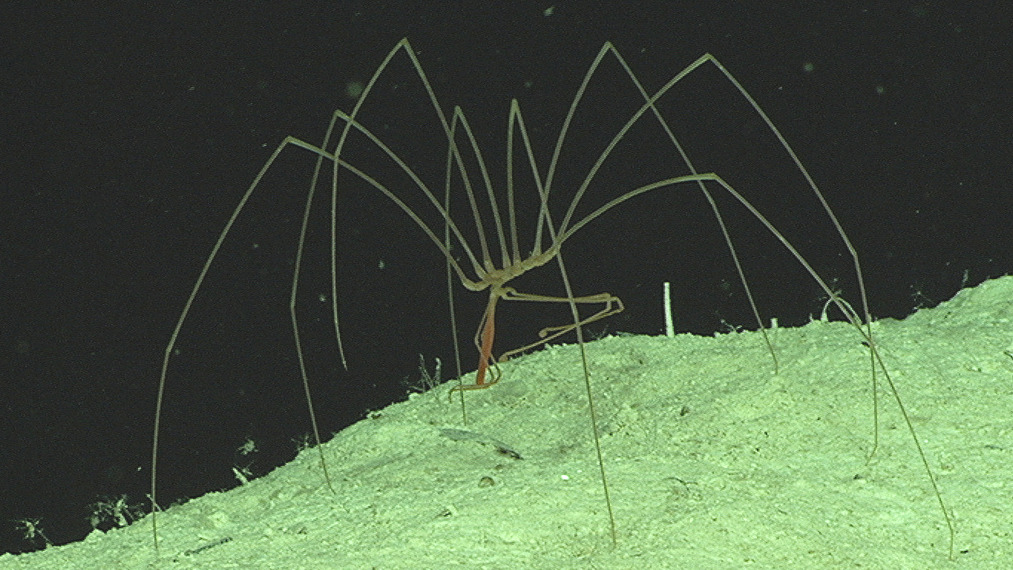

It is precisely because of these two issues, the astronomical quantities of data and the limited time allocated to consume them, that in order to be successfully digested, a block of information must be as simple as possible and at the same time, well, quite tasty. We should therefore not be shocked that the true rulers of the Internet are low-resolution captioned cat photos. Indeed, to spread quickly and widely, information must be efficiently compressed so as to take as little room as possible, in order to make the most of the small amount of memory allocated to it on the different software, hardware and meatware networks. It also needs to be remarkable and attractive in order to catch the attention of our digital reproductive organs, so that the legacy of the former can survive digital entropy, then go forth and multiply on as many networks as is memely possible.

In terms of compression, a good example is the linguistic transformation found in text messages, which goes hand in hand with mobile-communication-inspired microblogging platforms such as Twitter. These transformations are also fed back and forth into the slang of mainstream surface Web discussion groups, as well as into Deep Web communities with their local accents and dialects. Some forms of compression, such as emoticons, even have the ability to modulate the interpretation of any given text. Yet beyond the textual aesthetics of compression, we are witnessing today a growing use of data visualisation. Datavis, infovis, or whatever one might want to call it, is today the ultimate aphrodisiac for the information orgy. As graphic designers remind us, information is beautiful. It also gains popularity because it seems to allow vast amounts of information to be unfolded and laid out in a manageable, yet visually pleasant, form. Now, take into account our increasing dependence on digital information to experience and interface with the world, combine that with the belief that an image is worth a thousand words, and the result is the artificiality of a society built on top of visual euphemisms: a collection of visual essays that depict what life is, once sampled and filtered through datavis algorithms or nostalgic Instagram instant noodle recipes.

We all experienced this artificiality during the Fukushima Daiichi nuclear disaster. Not long after the catastrophic event in Japan, we witnessed an information flood, as if the tsunami had sadly triggered the many waves of visual euphemisms that took the networks by surprise. In a matter of days, we were surrounded by graphics showing radiation levels, maps of terror, visualisations of the earthquake's impact on the region, as well as a whole series of illustrations and animations deconstructing the mechanics and statistics of the event, while visually extrapolating what was yet to come from this tragic episode. Yet none of these efforts, regardless of their visual qualities, managed to communicate the unquantifiable horror. Worse, even though we all participated in this infovis marathon, as consumers and producers, and despite our intentions, most of us are in fact unable today to explain precisely what happened outside the realm of these visual representations. The visual euphemism has transformed and overshadowed the local experience of the event, and all of us have gladly accepted the invitation to dance with simulacra.

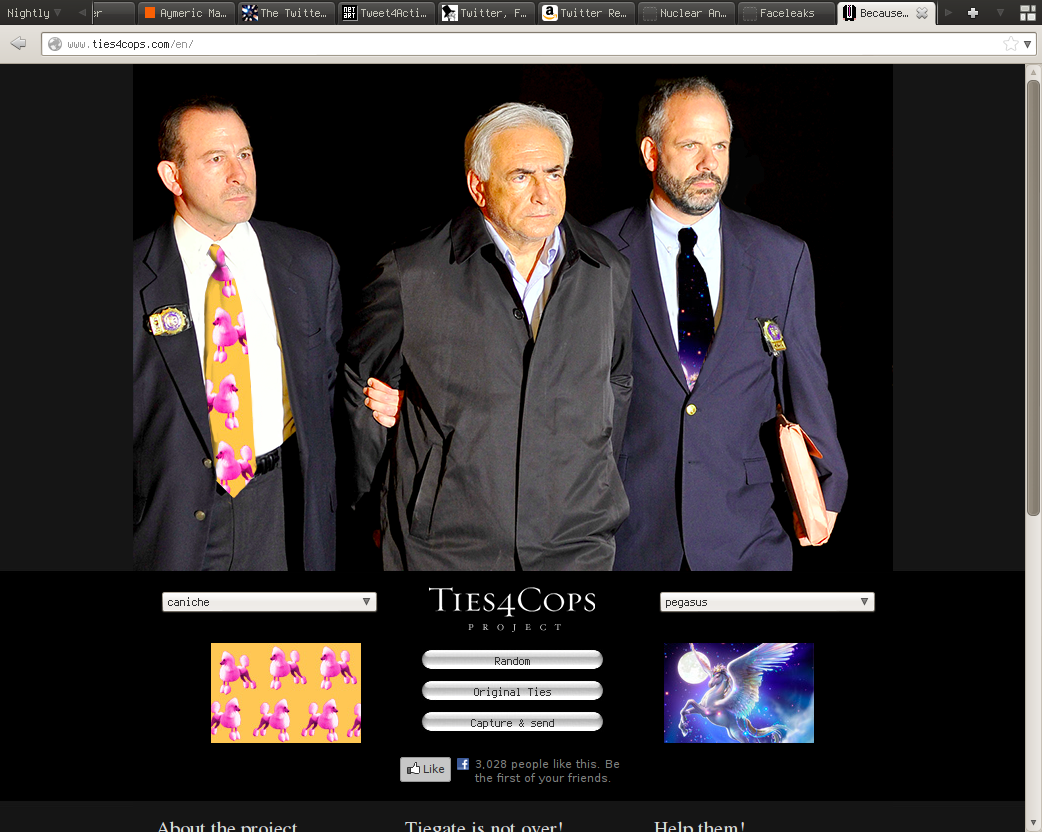

What about network-friendly art, you may wonder? In order to survive in this environment, art must find extreme strategies that will maintain its visibility when piped in between another tragic event, a couple of leaks and a media glitch from a celebrity. For a start, it is perfectly possible for artists to follow the marketing wet dream of appropriating and cracking the recipe of viral creations. In this situation, the work aims to reach the status of meme in order to maximise its visibility within social networks. The work and its documentation are produced to be easily copied, consumed and inserted into various social media platforms, and the content is often a mix of cultural appropriation from diverse online niches, borrowing anything that can be found anywhere from image boards to pirate communities. Projects from the F.A.T. Lab use this strategy to create micro-interventions and small works, adopting a “release early, release often” mode of production, testing ideas and literally brute-forcing their projects on the net.

Sometimes, however, the production and dissemination of epitextual documentation, in reference to one of the sub-components of Gérard Genette's notion of the paratext, becomes more important than the work itself. This is what happened, indirectly, with the project “Web 2.0 Suicide Machine”. While the project was only experienced by very few, the idea behind it transcends its implementation. In the end, the suicide machine only exists within an imaginary that is sustained by online videos, news items, articles and academic fantasy. It becomes information. Of course, this aspect of art as information can be built directly into the work itself. A good example of such manipulation can be found in the programmed launch and death of the site “Lovely-Faces” as part of the project “Face to Facebook”.

That said, one should sometimes be careful what one wishes for. There is indeed an obvious risk for such works to end up in an uncomfortable metabiosis with the host they base their critique upon, or worse, to become yet another piece of ephemeral content following another one in the tunnel of our short attention span. Eventually, such works can become involuntary agents of an exploitative apparatus, for which they operate as entertainment, interludes and sweet coating that help everyone swallow the bitter pill of control and surveillance on the surface Web. The challenge is therefore to break this flow and exit this closed circuit. Perhaps the way to achieve this is counter-intuitive. Instead of focusing our attention and energy on making works that are in fact ready-to-ship-and-consume content, we should work directly at the level of the container, in order to rethink networks not as a mere support for information, but also as an artistic medium that is critical of these very modes of production and consumption. If networked art today ever intends to go beyond the visualisation, the virality and the lulz, its participants must start to work outside the infrastructures and the context of the surface Web, instead of developing a symbiosis with the latter. It is always an option for artists to appropriate more than just media practices and online folklore. In that regard, topologies, protocols and infrastructures are very powerful artistic material.

Oh dear Deepnet art, I long for you. Take your time.

Extended version of a text originally published in MCD#69 | NET ART – WJ-SPOTS #2 : Les artistes s’emparent du réseau / Artists take over the network, edited by Anne Roquigny, Anne-Cécile Worms and Laurent Diouf. The publication is available for purchase here.

Sea spider photo CC-BY-SA by NOAA, Ocean Explorer.

Screenshots and text released under COPYPASTE License.